MR Immersive Theatre WICKED

Concept:

MR Immersive Theatre WICKED is a course project for an Augmented Reality module. It uses MR technology to combine the musical's storyline with an immersive, interactive experience, letting the audience step into the story world, or even act in the musical, at minimal cost. It turns an ordinary room into a theatre.

Developed with: Unity 3D + Meta All-in-One SDK

Recommended device: Meta Quest 3

Team:

Peiheng Cai, Zoey Wang, Irene Chang, Michael Doyle

My role:

UX Design, Unity development, Motion capture actor

Project Overview

Story:

The experience is based on the musical "WICKED", a magical story about the friendship between Elphaba and Glinda, and how differently they act towards the evil wizard.

Adaptation:

- Adapt the most famous song, "For Good", into an immersive experience

- Combine the real world with the magical world of Oz

- Weave a Karaoke mode into the storyline

- Let the audience play a character and perform alongside another one

Show how MR can make theatre artworks more accessible and immersive.

Why MR

Live musicals in real theatres have limitations:

- Not every city has a theatre that stages musicals.

- Theatres are not always convenient for people with disabilities to attend.

- Once the curtain falls, the show is over; there are few innovative ways to keep engaging audiences, or to commercialize the work, after the curtain call.

Merging of real and virtual worlds

- The story takes place in a magical virtual world, but the value of friendship it conveys is meaningful in real life.

- This matches the concept of MR: merging the virtual and the real.

Steadier environment, less dizziness

- Although VR may give a stronger sense of immersion, the frequent scene changes in a musical make audiences more likely to feel dizzy in VR.

- In MR, the real surroundings stay the same, which reduces dizziness.

User Journey

Virtual Window

- Press the controller trigger to place virtual windows in the real room.

- See the magical world outside through the windows in the real room.

Props Interaction

- Press the controller trigger to open Elphaba's magic book.

- Learn what happened between Elphaba and Glinda through a background video.

Voice Interaction

- Follow the line hint panel to learn Glinda's lines.

- Hold the controller trigger to speak.

- Deliver the lines correctly to Elphaba, as if performing the play.

Sing Karaoke

- Follow the Karaoke subtitles to sing "For Good" as Glinda.

- Enjoy the magical visual effects and environment while singing and performing.

Project Timeline

Team Timeline

Oct 17th - Oct 31st: Project Predesign & Pitch

- Confirm the adaptation of "WICKED"

- Choose 3 songs to adapt

- Add a Karaoke mode

- Add magical forest virtual assets to the real room

Nov 1st - Nov 12th: Design

- Narrow the focus to one song, "For Good"

- Add interactions with the characters while singing together

- Add VFX tied to the lyrics

- Add a background video to complete the narrative

My role

- Organize team meetings

- Case study

- Brainstorm the concept design

- Pitch slides covering the introduction, why MR, related work, and the technical plan for interaction

- Pitch delivery

- Detail the interaction and animation design (using storyboards)

- Finalize the product requirement doc with a complete flow

Nov 12th - Nov 28th: Progress Demo

- Character model prototype

- Scene model prototype

- Basic UX flow development

- Develop in Unity:

1. Virtual window

2. Background video

3. Voice detection

4. Insert subtitles

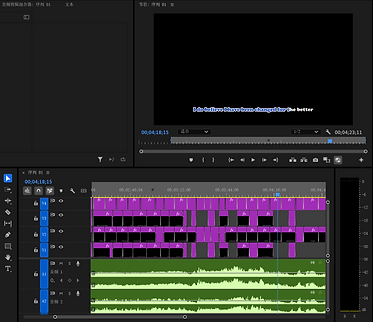

Karaoke subtitle made in Premiere Pro

Nov 29th - Dec 12th: Detailed features development

- Refined character model

- Refined scene

- Complete body animation

- Complete experience demo

- Develop in Unity:

1. Operation hint UI panels

2. Voice volume detection

3. VFX Graph

4. Combine animation with the character

- Design the animation list


Dec 13th - Jan 5th: Motion & Facial Capture -> Refined Demo

- Record motion & facial capture animation

- Replace the original animation with the motion-captured one

- Mocap & FaceCap actor at the VR lab

- Develop in Unity: combine body and face animation in an Animator Controller (base layer + override layer)

- Demo video editing
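The base layer + override layer setup can be pictured with a minimal Python model (not Unity code) of how an Override layer combines with the layer beneath it: inside the layer's avatar mask, each value moves from the base value toward the override value by the layer weight, and outside the mask the base value is kept. Each channel is treated as a single scalar, such as a facial blendshape weight from face capture; the channel names are hypothetical, and real bone rotations would be blended as quaternions rather than linearly.

```python
from typing import Dict, Set


def apply_override_layer(
    base_pose: Dict[str, float],
    override_pose: Dict[str, float],
    mask: Set[str],
    weight: float,
) -> Dict[str, float]:
    """Blend an Override layer on top of the base layer.

    Channels inside `mask` are linearly interpolated from the base value
    toward the override value by `weight`; all other channels keep the base value.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0 and 1")
    result = dict(base_pose)
    for channel, value in override_pose.items():
        if channel in mask:
            base_value = base_pose.get(channel, 0.0)
            result[channel] = base_value + (value - base_value) * weight
    return result


# Hypothetical channels: body data from mocap, face data from face capture.
body = {"spine_bend": 10.0, "jaw_open": 0.0}
face = {"jaw_open": 80.0, "spine_bend": 99.0}
print(apply_override_layer(body, face, mask={"jaw_open"}, weight=1.0))
# {'spine_bend': 10.0, 'jaw_open': 80.0}
```

Restricting the override to a mask is what lets the face layer drive only the facial channels while the mocap body animation on the base layer stays untouched.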


Technical Detail

Virtual Window

The virtual scene outside the room is rendered as a skybox video. In typical passthrough MR development, however, the skybox would cover the passthrough view of the real room, so the main camera's background is usually set to a solid color with alpha = 0. With that setting the room shows up, but the virtual world outside cannot be seen.

To solve this, we first switch the camera background back to Skybox, then adjust the render order and use a stencil material to build the virtual window feature. Finally, a raycast from the controller places the window prefab at the hit point.

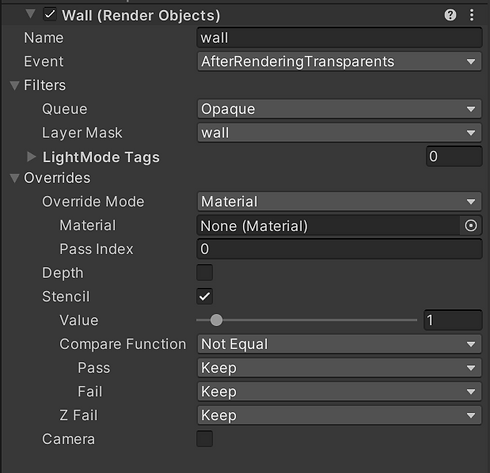
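The window placement step can be sketched as a ray-plane intersection. In Unity this would typically be a physics raycast from the controller against the room's colliders; the Python sketch below replaces that with plain vector math against a single wall so the geometry is visible. The function names, the unit-length wall normal pointing into the room, and the 1 cm offset are assumptions for illustration.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _scale(v: Vec3, s: float) -> Vec3:
    return (v[0] * s, v[1] * s, v[2] * s)


def ray_plane_hit(origin: Vec3, direction: Vec3, plane_point: Vec3,
                  plane_normal: Vec3, eps: float = 1e-6) -> Optional[Vec3]:
    """Return where the controller ray meets the wall plane, or None on a miss."""
    denom = _dot(direction, plane_normal)
    if abs(denom) < eps:  # ray runs parallel to the wall
        return None
    t = _dot(_sub(plane_point, origin), plane_normal) / denom
    if t < 0:  # the wall is behind the controller
        return None
    return _add(origin, _scale(direction, t))


def place_window(origin: Vec3, direction: Vec3, wall_point: Vec3,
                 wall_normal: Vec3, offset: float = 0.01) -> Optional[Tuple[Vec3, Vec3]]:
    """Return (position, facing) for the window prefab, or None if the ray misses.

    `wall_normal` is assumed to be unit length and to point into the room.
    """
    hit = ray_plane_hit(origin, direction, wall_point, wall_normal)
    if hit is None:
        return None
    # Nudge the window slightly off the wall so it does not z-fight with it.
    return _add(hit, _scale(wall_normal, offset)), wall_normal


# Controller at 1.5 m height pointing forward at a wall 3 m away.
print(place_window((0.0, 1.5, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 3.0), (0.0, 0.0, -1.0)))
```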

1. Set Rendering Order

Make the room render in front of the skybox.

- Assign the MRUK room mesh to a "wall" layer and give it a new material called "passthrough" with Render Queue = 2000 (Opaque).

- In the URP-HighFidelity-Renderer, add a Render Objects feature for the wall layer with its event set to "AfterRenderingTransparents".

2. Window Material with Stencil

Material for the virtual window; reveals the skybox scene through the room wall.

- Stencil shader: writes a custom _StencilID value into the stencil buffer (Pass Replace) without changing the color buffer (Blend Zero One) or the depth buffer (ZWrite Off).

- Create a window material with the Stencil shader, Stencil ID = 1 (marking the window area with stencil value 1) and Render Queue = 3000 (Transparent).

- In the URP renderer, set the wall layer's stencil test to != 1 (NotEqual 1), so the wall is not drawn inside the window area and the skybox shows through.

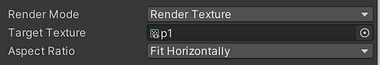

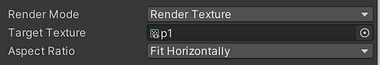

3. Skybox video

The virtual environment seen through the window.

- The scene is built in Unreal Engine and recorded as a panoramic video, with animations timed to the lyrics.

- Play the video in Render Texture mode and use the render texture to render the skybox.
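The combined effect of the render order and the stencil test can be checked with a toy model. The Python sketch below is not shader code: it simulates one row of wall pixels and applies the passes in the order described above (skybox background, window stencil write, then the wall pass with stencil NotEqual 1). Depth testing and real colors are left out, and the pixel labels are hypothetical.

```python
from typing import List

SKYBOX = "skybox"
PASSTHROUGH = "passthrough"  # drawn with alpha 0, so the real room shows through


def render_wall_row(width: int, window: range, stencil_id: int = 1) -> List[str]:
    """Simulate one row of wall pixels that contains a virtual window."""
    # 1) Camera background = Skybox: every pixel starts as the virtual world.
    color = [SKYBOX] * width
    stencil = [0] * width  # stencil buffer cleared to 0 at the start of the frame

    # 2) Window material (Render Queue 3000): Ref = stencil_id, Pass Replace.
    #    Blend Zero One keeps the existing color and ZWrite Off leaves depth,
    #    so only the stencil buffer changes.
    for x in window:
        stencil[x] = stencil_id

    # 3) Render Objects pass for the "wall" layer at AfterRenderingTransparents,
    #    stencil compare NotEqual: the passthrough wall covers every pixel
    #    except the ones the window marked.
    for x in range(width):
        if stencil[x] != stencil_id:
            color[x] = PASSTHROUGH
    return color


print(render_wall_row(6, range(2, 4)))
# ['passthrough', 'passthrough', 'skybox', 'skybox', 'passthrough', 'passthrough']
```

Because the wall pass runs after the transparent queue, the window has already marked its pixels by the time the wall is drawn, which is why the render event and the queue values have to line up this way.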


Voice Interaction

Voice interaction covers three features: detecting what the player says, transcribing the words on screen, and checking whether the words match the script. Voice detection uses the wit.ai API: the voice data is processed on the server, and sentences are recognized by a model the designers trained. The Meta Voice SDK links the project to the API through the Understanding Viewer and Response Matcher.

1. Training

On wit.ai

- Train each sentence the player needs to say, labeled with a specific entity (tag).

2. appVoiceExperience

Link the Unity project to the trained wit.ai API

- Configure the API with the Meta Voice SDK toolkit. Use the Understanding Viewer and Response Matcher to create the detection for each sentence.

- Once a sentence is detected, OnMultiValueEvent is called, where the overall experience state and the character state are updated.

3. Voice Manager

Driven by the event system

- Add event listeners for request start, request end, partial transcription, and completed transcription.

- OnRequestInitialized (start) and OnRequestCompleted (end) are used to play sound effects.

- OnPartialTranscription (partial transcription)
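The Voice Manager's event wiring follows a plain listener pattern. The Python sketch below mimics it with a hypothetical VoiceEvents class rather than the Meta Voice SDK API; the event keys only mirror the callbacks named above. Two parts are assumptions: using the partial transcription to update the on-screen text, and the local word comparison in on_full, which stands in for the wit.ai model and Response Matcher that make this decision in the project. The expected line is a placeholder, not a line from the musical.

```python
import re
from typing import Callable, Dict, List


class VoiceEvents:
    """Hypothetical stand-in for the SDK's request and transcription events."""

    def __init__(self) -> None:
        self._listeners: Dict[str, List[Callable[..., None]]] = {}

    def add_listener(self, event: str, callback: Callable[..., None]) -> None:
        self._listeners.setdefault(event, []).append(callback)

    def invoke(self, event: str, *args: str) -> None:
        for callback in list(self._listeners.get(event, [])):
            callback(*args)


def normalize(text: str) -> List[str]:
    """Lower-case the text and keep only its words, ignoring punctuation."""
    return re.findall(r"[a-z']+", text.lower())


class VoiceManager:
    def __init__(self, events: VoiceEvents, expected_line: str) -> None:
        self.expected = normalize(expected_line)
        self.transcript = ""         # text shown on screen (assumed use)
        self.sounds: List[str] = []  # sound effects that were triggered
        self.line_correct = False
        # Keys mirror OnRequestInitialized, OnRequestCompleted,
        # OnPartialTranscription and the completed transcription.
        events.add_listener("request_initialized", lambda: self.sounds.append("start"))
        events.add_listener("request_completed", lambda: self.sounds.append("end"))
        events.add_listener("partial_transcription", self.on_partial)
        events.add_listener("full_transcription", self.on_full)

    def on_partial(self, text: str) -> None:
        self.transcript = text

    def on_full(self, text: str) -> None:
        self.transcript = text
        # Hypothetical local check; in the project, wit.ai and the
        # Response Matcher decide whether the line was correct.
        self.line_correct = normalize(text) == self.expected


events = VoiceEvents()
manager = VoiceManager(events, "It is my turn now")
events.invoke("request_initialized")
events.invoke("partial_transcription", "It is my")
events.invoke("full_transcription", "It is my turn now!")
events.invoke("request_completed")
print(manager.line_correct, manager.sounds)
```

Keeping the sound effects, the transcript display, and the line check in separate listeners means each can change without touching the others, which mirrors how the SDK events split the work.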

Karaoke Interaction

Karaoke mode has two parts: the karaoke subtitles and special effects driven by the microphone input volume. When the player sings louder, the microphone model on the controller grows larger, and at the same time the glowing vine controlled by a VFX Graph grows faster, making the world feel more magical.

1. Subtitle

- Made in Premiere Pro by applying a mask to the colored subtitle layer.

- Animate the mask size with keyframes.

- The video must have an alpha channel, so WebM was chosen as the export format.

2. Mic Volume Detection

- Create a clip that the microphone records into, and read the latest sampleWindow samples of voice data from it.

- Calculate the volume from the data within that sampleWindow.

- Take the maximum level within the window as the current volume.

- Map the current volume range to the VFX growth speed and the size of the microphone model.

For more details, see: https://discussions.unity.com/t/check-current-microphone-input-volume/474574

3. VFX Graph

- Two particle systems are used, one with strips and one without, to simulate the vine and the glowing points around it.

- Both systems combine a curved movement with turbulence for rotation. Because the particle growth speed is driven by the volume, the vine grows higher when the player sings louder and lower when they sing softly.

- This lets the audience see the magical effect change as they sing.
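The volume pipeline above (peak level over a sampleWindow, then mapping to effect parameters) can be sketched in Python as follows. It takes the squared peak sample in the window as the level and reads the microphone clip as a circular buffer; the thresholds and output ranges (LOUD_LEVEL, the 1.0 to 1.5 scale, the 0.2 to 2.0 growth speed) are hypothetical tuning values, not the project's.

```python
from typing import List, Tuple

LOUD_LEVEL = 0.25  # hypothetical level treated as "singing at full volume"


def peak_level(clip: List[float], position: int, sample_window: int = 128) -> float:
    """Peak squared amplitude of the last `sample_window` samples before `position`.

    `clip` is read as a circular buffer, like a looping microphone recording,
    and `position` is the index the microphone will write next.
    """
    if not clip or sample_window <= 0:
        return 0.0
    window = min(sample_window, len(clip))
    level_max = 0.0
    for i in range(window):
        sample = clip[(position - window + i) % len(clip)]
        level_max = max(level_max, sample * sample)  # squared sample (power)
    return level_max


def map_range(value: float, in_min: float, in_max: float,
              out_min: float, out_max: float) -> float:
    """Linearly map `value` from [in_min, in_max] to [out_min, out_max], clamped."""
    t = (value - in_min) / (in_max - in_min)
    t = min(max(t, 0.0), 1.0)
    return out_min + t * (out_max - out_min)


def update_effects(clip: List[float], position: int) -> Tuple[float, float]:
    """Return (microphone model scale, vine growth speed) for the current frame."""
    level = peak_level(clip, position)
    mic_scale = map_range(level, 0.0, LOUD_LEVEL, 1.0, 1.5)
    vine_speed = map_range(level, 0.0, LOUD_LEVEL, 0.2, 2.0)
    return mic_scale, vine_speed


silence = [0.0] * 1024
print(update_effects(silence, position=0))  # (1.0, 0.2)
```

Clamping in map_range keeps a sudden shout from scaling the microphone model or the vine speed beyond their intended ranges.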

Animation

The animation flow was designed with reference to the original stage musical, the film, and other performance clips. Animations for models in the virtual scene are made in Unreal Engine (UE) and exported as part of the skybox video. Character animation is recorded with motion capture in Motive and facial capture in Unity Face Capture. After recording, body animation is exported as FBX, while facial animation is saved directly in the Assets folder.

Experience Process Controller

Because the experience is linear, an overall controller records its current state and calls the corresponding scripts to initialize each feature. This controller is also responsible for showing the hint UI.
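A linear controller like this maps naturally onto an ordered list of stages. The Python sketch below is a minimal illustration, not the project's script: the stage names follow the user journey, while the hint texts and the initializers dictionary (one callable per stage, standing in for the feature scripts) are hypothetical.

```python
from enum import Enum, auto
from typing import Callable, Dict, Optional


class Stage(Enum):
    PLACE_WINDOWS = auto()
    OPEN_BOOK = auto()
    BACKGROUND_VIDEO = auto()
    SPEAK_LINES = auto()
    SING_KARAOKE = auto()
    FINISHED = auto()


# Hypothetical hint texts shown on the hint UI panel for each stage.
HINTS: Dict[Stage, str] = {
    Stage.PLACE_WINDOWS: "Press the trigger to place a window",
    Stage.OPEN_BOOK: "Press the trigger to open the magic book",
    Stage.SPEAK_LINES: "Hold the trigger and say Glinda's line",
    Stage.SING_KARAOKE: "Sing along with the subtitles",
}


class ExperienceController:
    """Tracks the current stage and starts the matching feature on entry."""

    ORDER = list(Stage)  # Enum iteration follows definition order

    def __init__(self, initializers: Dict[Stage, Callable[[], None]]) -> None:
        self.initializers = initializers
        self.hint: Optional[str] = None
        self.stage = Stage.PLACE_WINDOWS
        self._enter(self.stage)

    def _enter(self, stage: Stage) -> None:
        self.stage = stage
        self.hint = HINTS.get(stage)  # None hides the hint panel
        init = self.initializers.get(stage)
        if init is not None:
            init()

    def advance(self) -> Stage:
        """Move to the next stage; called when the current stage is completed."""
        if self.stage is not Stage.FINISHED:
            self._enter(self.ORDER[self.ORDER.index(self.stage) + 1])
        return self.stage


started = []
controller = ExperienceController(
    {stage: (lambda s=stage: started.append(s.name)) for stage in Stage}
)
controller.advance()
print(controller.stage, controller.hint)
```

Keeping the order in one place means adding or reordering a scene only touches the Stage enum and its initializer, not the individual feature scripts.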