LOVE.EXE: A Co-op VR Escape Room

LOVE.EXE is a collaborative escape room game about AI technology and free will, played across a real-world space and a virtual one.

As his beloved wife's life nears its end, a programmer husband chooses to upload her consciousness data, training an AI version of his wife. He hopes to use this technology to create a bionic replica, keeping her by his side forever.

But is being digitized what his dying wife truly wants? And will the AI wife, as digitized data, be willing to act as someone else's replica and serve her husband unconditionally? Can they both escape this dilemma?

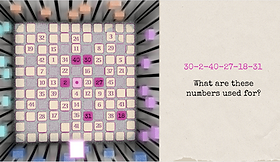

The game involves two players: one plays the real-world wife, who is nearing death, and the other plays the AI wife in the VR space. They must work together to restore damaged paintings by drawing in midair, reconstruct a fragmented comic in a sliding puzzle, and restore a Rubik's Cube filled with memories of love.

The game's central idea is real-world and VR collaboration. It blends a narrative with puzzle gameplay, letting players embody distinct roles in separate dimensions and work together to escape. You are the one in the story and the one who changes the story.

🌟 Credit

Gameplay Design & TA: Ruyin Zhang

Narrative Design: Ruyin Zhang | Cuiyi Lin | Peiheng Cai

3D Artist: Weiyan Wu | Zhuoya Wang

Animator: Zhuoya Wang

UI: Weiyan Wu

Developer: Peiheng Cai | Cuiyi Lin

Storyline

The illusion of “love”

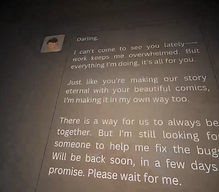

In the name of "love," the husband uploads his dying wife's consciousness, trapping the AI in an endless cycle of restoration and waiting.

The conflict of “love”

The real wife uncovers her husband's scheme, while the AI awakens through endless restoration—does "love" mean sacrifice and control?

The truth of “love”

Defying the husband's plan, the two wives break the information barrier, share the truth, and expose his control and deception.

The escape from “love”

Together, they decode the final password—one regains freedom, the other erases herself, ending the fate controlled by "love."

Project Detail

📝 User Journey

Player 1: Physical Space

Start

Find the 5 hidden envelopes based on Player 2's descriptions

Solve the envelope clues to help Player 2 finish the puzzles

Get the final password from Player 2

Player 2: VR Space

End of game

Level 1 Start

Get story hints

Puzzle 1 Start

Solve the sliding puzzle following Player 1's instructions

Two sides of the cube light up, and Player 2 receives a key.

Puzzle 2 Start

Use the key to open a locked box and get the dolls for the music box

Get music box hints from Player 1

Put the dolls into the music box in the right order

Two more sides of the cube light up, and Player 2 finds a drawing pen in the box.

Puzzle 3 Start

Get 4 drawing gestures from Player 1

Find the hidden information on each cell and form a password

Share the password with Player 1 and enter it on the control desk

Jump to the correct cell in the new jump space

Level 3 Start

The two wives connect with each other and uncover the truth. The virtual space crashes.

Put the dolls into the cube in the right order to experience how free will takes shape.

Restore the Rubik's Cube, either by hand or by finding a hidden button

Level 2 Start

The cube is fully lit, the space falls into chaos, and the story continues

Get painting pieces and recover the paintings

Draw gestures in front of 4 broken paintings

My Contribution

Feature 1: Rubik's Cube

Rotate the Rubik's Cube

Because the game needs to track whether the cube is currently being rotated, I implemented this feature as a private method.

The method returns true while the primary index trigger is held, and a ray is cast from the center of the left controller. It records the controller's movement, including its position and its direction relative to the main camera, and changes the cube's localRotation according to that movement.

This method is called every frame.
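The sketch below shows one way to turn controller movement into cube rotation. It is a hedged illustration rather than the project's code. The names `drag_rotation` and `degrees_per_meter`, and the choice of `cross(drag, view)` as the rotation axis, are all assumptions. Python stands in for the Unity C# script.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _length(v: Vec3) -> float:
    return math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)


def drag_rotation(prev_pos: Vec3, cur_pos: Vec3, view_dir: Vec3,
                  degrees_per_meter: float = 360.0) -> Tuple[Vec3, float]:
    """Convert one frame of controller movement into an (axis, angle in degrees) pair.

    Hypothetical helper. The axis is perpendicular to both the drag and the viewing
    direction, so the cube rolls the way the hand moved. Motion along the view ray is ignored.
    """
    view_len = _length(view_dir)
    view = (view_dir[0] / view_len, view_dir[1] / view_len, view_dir[2] / view_len)
    delta = (cur_pos[0] - prev_pos[0], cur_pos[1] - prev_pos[1], cur_pos[2] - prev_pos[2])
    axis = _cross(delta, view)
    # With a unit view vector, |delta x view| is the drag distance across the view.
    across = _length(axis)
    if across < 1e-9:
        return (0.0, 0.0, 0.0), 0.0
    unit_axis = (axis[0] / across, axis[1] / across, axis[2] / across)
    return unit_axis, across * degrees_per_meter
```

In Unity, the result could be applied with `cube.localRotation = Quaternion.AngleAxis(angle, axis) * cube.localRotation;`. Unity uses a left-handed coordinate system, so the axis sign may need flipping to make the cube follow the hand.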

Rotate each face of the Rubik's Cube

(a) Select the sub-cube

(b) Detect & move the face

The cube consists of 27 sub-cubes. Before any face is turned, each sub-cube has the same rotation as the cube's center.

1. Sub-cube Detection & Move Direction Computation

The player uses the right controller's trigger to turn a face of the cube. While the trigger is held, a raycast from the controller to the cube detects which sub-cube is selected, and the controller's starting position is recorded. The local rotation of the selected sub-cube is used as the target for finding the face.

When the trigger is released, the move direction is calculated and used to select the face.

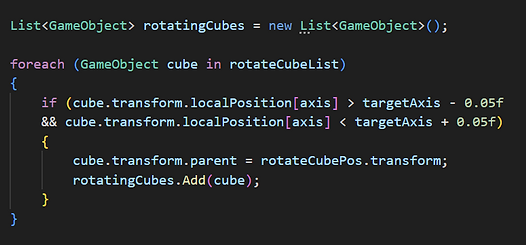
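Computing the move direction could work as in the sketch below, assuming the raycast also returns the outward normal of the face that was hit. The drag is projected onto that face, snapped to its dominant axis, and crossed with the normal to get the layer's turning axis. `snap_to_axis` and `face_rotation_axis` are hypothetical names, and the snapping rule is an assumption rather than the project's exact logic.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def snap_to_axis(v: Vec3) -> Optional[Vec3]:
    """Keep only the dominant component of v as a signed unit vector (None if v is ~zero)."""
    i = max(range(3), key=lambda k: abs(v[k]))
    if abs(v[i]) < 1e-6:
        return None
    out = [0.0, 0.0, 0.0]
    out[i] = 1.0 if v[i] > 0 else -1.0
    return (out[0], out[1], out[2])


def face_rotation_axis(start: Vec3, end: Vec3, hit_normal: Vec3) -> Optional[Vec3]:
    """Axis of the layer turn implied by dragging across the face with outward normal hit_normal."""
    n = snap_to_axis(hit_normal)
    if n is None:
        return None
    drag = [end[k] - start[k] for k in range(3)]
    normal_axis = max(range(3), key=lambda k: abs(n[k]))
    drag[normal_axis] = 0.0  # ignore motion into or out of the face
    d = snap_to_axis((drag[0], drag[1], drag[2]))
    if d is None:
        return None  # the hand did not move across the face
    # The layer turns around the axis perpendicular to both the face normal and the drag.
    return (n[1] * d[2] - n[2] * d[1],
            n[2] * d[0] - n[0] * d[2],
            n[0] * d[1] - n[1] * d[0])
```

Projecting out the normal component first keeps a push toward the cube from being misread as a sideways drag.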

2. Select the face

Create an empty transform to act as the pivot for rotating the selected face.

Traverse every sub-cube. If its rotation matches the target, parent it to the empty transform.
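For comparison, the sketch below gathers a face by grid position. This is a common alternative to the rotation matching described above, swapped in here because integer positions make the comparison exact. `build_cube` and `select_layer` are hypothetical. In Unity, the returned sub-cubes would then be parented to the empty pivot with `Transform.SetParent`.

```python
from typing import Dict, List, Tuple

Coord = Tuple[int, int, int]


def build_cube() -> Dict[str, Coord]:
    """27 sub-cubes named by id, each at an integer grid coordinate in {-1, 0, 1}^3."""
    cubes: Dict[str, Coord] = {}
    for x in (-1, 0, 1):
        for y in (-1, 0, 1):
            for z in (-1, 0, 1):
                cubes[f"cube_{x}_{y}_{z}"] = (x, y, z)
    return cubes


def select_layer(cubes: Dict[str, Coord], target: str, axis: int) -> List[str]:
    """Ids of the 9 sub-cubes sharing the target's coordinate along axis (0=x, 1=y, 2=z)."""
    layer = cubes[target][axis]
    return sorted(cid for cid, pos in cubes.items() if pos[axis] == layer)
```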

3. Rotate the face

Create a coroutine to rotate the target face.

The rotationDuration parameter controls how long the rotation takes, and the animation is interpolated with Lerp.
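The sketch below shows the per-frame timing a Lerp-driven coroutine produces. A Python generator plays the role of the Unity coroutine, yielding one pivot angle per frame. The 90° target, the fixed `dt`, and the function name are assumptions. The clamped `lerp` mirrors `Mathf.Lerp`, which clamps `t` to [0, 1], so the last frame lands exactly on the target angle.

```python
from typing import Iterator


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation with t clamped to [0, 1], like Unity's Mathf.Lerp."""
    t = max(0.0, min(1.0, t))
    return a + (b - a) * t


def rotate_face(target_angle: float = 90.0, rotation_duration: float = 0.3,
                dt: float = 1.0 / 60.0) -> Iterator[float]:
    """Yield the pivot's angle once per frame, ending exactly on target_angle."""
    if rotation_duration <= 0.0:
        yield target_angle
        return
    elapsed = 0.0
    while elapsed < rotation_duration:
        elapsed += dt
        yield lerp(0.0, target_angle, elapsed / rotation_duration)
```

Ending on an exact angle matters here. Small float drift would leave sub-cubes slightly misaligned, which can break the rotation comparison used later to detect a solved cube.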

Recover the Rubik's Cube

(a) Assign the 6 faces to the 27 sub-cubes

(b) Check the normal vector of each face

A Rubik's Cube has 6 faces: F, L, B, D, R, U.

When the cube is fully recovered, all the sub-cubes belonging to the same face should have the same rotation.

So each time the player rotates a face, the check function is called to see whether the cube is fully recovered.
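The normal-vector check in (b) could be implemented as sketched below. This assumes each colored sticker knows its face label (F, L, B, D, R, U) and can report its current world-space normal rounded to an axis direction. `is_recovered` and the sticker tuples are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set, Tuple

Normal = Tuple[int, int, int]

# Outward normal of each face in the starting state (Unity-style: +z is Forward).
SOLVED_NORMALS: Dict[str, Normal] = {
    "F": (0, 0, 1), "B": (0, 0, -1),
    "U": (0, 1, 0), "D": (0, -1, 0),
    "R": (1, 0, 0), "L": (-1, 0, 0),
}


def is_recovered(stickers: Iterable[Tuple[str, Normal]]) -> bool:
    """stickers: (face label, current rounded world-space normal) for every sticker.

    The cube is recovered when all stickers with the same label point the same way.
    """
    directions: Dict[str, Set[Normal]] = defaultdict(set)
    for label, normal in stickers:
        directions[label].add(normal)
    return all(len(normals) == 1 for normals in directions.values())
```

This check compares stickers within each label instead of against `SOLVED_NORMALS`. As a result, turning the whole cube in the hand does not make a solved cube look unsolved.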

Auto-recover button

Not everyone knows how to solve a Rubik's Cube, so after the first playtest we added an "auto-recover" feature that lets players skip this step.

The button is hidden inside a vase, and Player 1 gives a hint about where to find it.

The vase has a Poke component and a breakable child object. When its surface is poked, the vase breaks and reveals the button.

Pressing the button runs the auto-recover process. We did not implement a Rubik's Cube solving algorithm. Instead, we record every step made during the Shuffle stage and replay those steps during recovery, inverted and in reverse order.
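The record-and-undo idea can be sketched by modeling the 27 sub-cubes as integer grid coordinates. The helpers are hypothetical, not the project's code. Undoing the log means walking it backwards and inverting each quarter turn. The sketch also assumes the player's own turns are appended to the same log. Replaying only the shuffle would not fix a cube the player has already turned.

```python
import random
from typing import Dict, List, Tuple

Coord = Tuple[int, int, int]
Move = Tuple[int, int, int]  # (axis 0/1/2, layer -1/0/1, quarter-turn direction +1/-1)


def rotate_coord(p: Coord, axis: int, s: int) -> Coord:
    """Rotate a grid coordinate a quarter turn about x (0), y (1) or z (2); s = +1 or -1."""
    x, y, z = p
    if axis == 0:
        return (x, -s * z, s * y)
    if axis == 1:
        return (s * z, y, -s * x)
    return (-s * y, s * x, z)


def apply_move(state: Dict[Coord, Coord], move: Move) -> None:
    """state maps each sub-cube's home coordinate to its current coordinate."""
    axis, layer, s = move
    for home, pos in list(state.items()):
        if pos[axis] == layer:
            state[home] = rotate_coord(pos, axis, s)


def shuffle(state: Dict[Coord, Coord], rng: random.Random, count: int, log: List[Move]) -> None:
    """Apply random quarter turns and append each one to the move log."""
    for _ in range(count):
        move = (rng.randrange(3), rng.choice((-1, 0, 1)), rng.choice((-1, 1)))
        apply_move(state, move)
        log.append(move)


def recovery_moves(log: List[Move]) -> List[Move]:
    """Undo the log: walk it backwards and invert each quarter turn."""
    return [(axis, layer, -s) for axis, layer, s in reversed(log)]
```

A quarter turn about an axis never changes a sub-cube's coordinate along that axis. Each inverted move therefore grabs exactly the sub-cubes the original move turned.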

Feature 2: Shaders

6 Faces 6 Stories: Stencil Shader

The Rubik's Cube uses the stencil buffer to show a different sub-scene inside each face.

Sub-scene n can only be seen through cube face n, which has stencil reference n.

This has to be set up on both sides. The shader on cube face n writes reference n into the stencil buffer, and the sub-scene's shader only keeps pixels whose stencil value equals n. With the material rendered at "AfterRenderingTransparent", the sub-scene content shows through the cube face.
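The stencil interplay can be simulated on a single row of pixels. This is a plain Python model of the two passes, not shader code. The face pass corresponds to the ShaderLab settings `Comp Always` / `Pass Replace`, and the sub-scene pass to `Comp Equal`. That is the usual setup for this kind of portal effect, but the function and data layout here are hypothetical. Stencil value 0 stands for "no face".

```python
from typing import Dict, List, Optional, Set


def composite(width: int,
              face_pixels: Dict[int, Set[int]],
              subscene_colors: Dict[int, str]) -> List[Optional[str]]:
    """Simulate the two stencil passes on a 1-D row of pixels.

    Pass 1 (cube faces): Comp Always, Pass Replace -> write each face's reference n.
    Pass 2 (sub-scenes): Comp Equal -> sub-scene n draws only where stencil == n.
    """
    stencil = [0] * width
    color: List[Optional[str]] = [None] * width
    for ref, pixels in face_pixels.items():
        for p in pixels:
            stencil[p] = ref
    for ref, c in subscene_colors.items():
        for p in range(width):  # each sub-scene tries to cover the whole row
            if stencil[p] == ref:
                color[p] = c
    return color
```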

Hologram Shader

The hologram shader is built in Shader Graph and has two main parts: a glowing base color and a glitch applied to vertex positions.

The base color has two components:

a) Fresnel Effect: Keep the original texture and multiply a color onto the edges. The glow is controlled by the emission intensity of the HDR color.

b) Scanning Lines: Scroll a scan-line texture over time, and add two scan lines of different widths to form the scanning effect. Finally, multiply the result by a glitch factor, a random number between 0 and 1.
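The math behind the two base-color components can be written out per pixel. `fresnel` follows the formula documented for Shader Graph's Fresnel Effect node. The scan-line helpers are hypothetical stand-ins for the scrolling texture, and their widths, densities and speeds are made-up values.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def fresnel(normal: Vec3, view_dir: Vec3, power: float = 2.0) -> float:
    """Fresnel Effect formula: (1 - saturate(dot(N, V))) ^ power.

    view_dir points from the surface toward the camera, so surfaces seen head-on
    give 0 and silhouette edges give 1.
    """
    n, v = _normalize(normal), _normalize(view_dir)
    d = max(0.0, min(1.0, n[0] * v[0] + n[1] * v[1] + n[2] * v[2]))
    return (1.0 - d) ** power


def scan_line(world_y: float, time: float, width: float, density: float, speed: float) -> float:
    """1 inside a band that scrolls upward over time, 0 outside."""
    phase = (world_y * density - time * speed) % 1.0
    return 1.0 if phase < width else 0.0


def scan_lines(world_y: float, time: float, glitch: float) -> float:
    """Add a thin and a wide scan line, clamp to 1, then multiply by a glitch value in [0, 1]."""
    thin = scan_line(world_y, time, width=0.05, density=40.0, speed=2.0)
    wide = scan_line(world_y, time, width=0.3, density=4.0, speed=0.5)
    return min(1.0, thin + wide) * glitch
```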

(a) Fresnel Effect

(b) Scanning Line

(c) Glitching

The vertex part creates a dynamic stripe effect based on world-space position. It combines sine waves with time to generate periodic variation, and Step nodes control the visibility of the stripes. One Sine node controls how fast the stripes move, and another controls how often they appear.
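Per vertex, the stripe logic reduces to the function below. This is a hypothetical sketch: the parameter names and default values are assumptions, and the offset is assumed to be added to the vertex's horizontal position. `step` matches the HLSL / Shader Graph Step node, which outputs 1 when the input is at least the edge.

```python
import math


def step(edge: float, x: float) -> float:
    """HLSL / Shader Graph Step: 1 when x >= edge, else 0."""
    return 1.0 if x >= edge else 0.0


def glitch_offset(world_y: float, time: float, amplitude: float = 0.05,
                  stripe_freq: float = 30.0, stripe_speed: float = 4.0,
                  burst_freq: float = 2.0, stripe_edge: float = 0.8,
                  burst_edge: float = 0.9) -> float:
    """Horizontal offset for one vertex.

    The first sine scrolls thin stripes along world Y (speed). The second gates how
    often the stripes appear at all (frequency). Step turns both into on/off masks.
    """
    stripe = step(stripe_edge, math.sin(world_y * stripe_freq + time * stripe_speed))
    burst = step(burst_edge, math.sin(time * burst_freq))
    return amplitude * stripe * burst
```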

Feature 3: Jump Room

Hidden information

Every cell has a hotspot the player can jump to and a box collider that detects whether the player is on that cell. If so, the cell shows its letter. Otherwise, it shows its number.
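The per-cell behavior is a small state toggle, sketched below with a hypothetical `JumpCell` class. The two method names echo Unity's `OnTriggerEnter`/`OnTriggerExit` collider messages, which is an assumption about how the box collider is wired up.

```python
from dataclasses import dataclass


@dataclass
class JumpCell:
    number: int
    letter: str
    occupied: bool = False

    def on_trigger_enter(self) -> None:
        # The player's collider entered this cell's box collider.
        self.occupied = True

    def on_trigger_exit(self) -> None:
        self.occupied = False

    @property
    def label(self) -> str:
        """The hidden letter while the player stands here, the number otherwise."""
        return self.letter if self.occupied else str(self.number)
```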

Control Desk

The control desk class keeps a List that stores every letter the player inputs, and there are 26 prefabs, one per letter. When the player inputs a letter by clicking it on the keyboard (a Ray Interactable), the matching prefab is instantiated at the next position and added to the list. When the player removes a letter, the last item in the list is removed and its GameObject is destroyed.
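The list behaves like a stack, as this sketch shows. `LetterObject` stands in for an instantiated letter prefab, and setting `destroyed` stands in for `Destroy(gameObject)`. The spacing, origin and `max_length` limit are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class LetterObject:
    """Stand-in for an instantiated letter prefab."""
    char: str
    position: Tuple[float, float, float]
    destroyed: bool = False


@dataclass
class ControlDesk:
    origin: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    spacing: float = 0.1
    max_length: int = 8
    letters: List[LetterObject] = field(default_factory=list)

    def input_letter(self, char: str) -> bool:
        """Place the prefab for char in the next slot; reject non-letters or a full desk."""
        char = char.upper()
        if len(char) != 1 or not ("A" <= char <= "Z") or len(self.letters) >= self.max_length:
            return False
        x, y, z = self.origin
        slot = len(self.letters)
        self.letters.append(LetterObject(char, (x + slot * self.spacing, y, z)))
        return True

    def remove_letter(self) -> None:
        """Remove the most recent letter; in Unity its GameObject would be destroyed."""
        if self.letters:
            self.letters.pop().destroyed = True

    @property
    def text(self) -> str:
        return "".join(letter.char for letter in self.letters)
```

Because letters are only ever added or removed at the end, each new letter's position can be computed from the list length alone.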

Help Tools: UI & Audio

The player is one of the characters in the story, so the narrative is delivered mainly through audio. As a result, the game relies on many audio clips, with subtitles providing guidance.

I used a "UIManager" script and an "AudioManager" script to control the UI and audio. Both persist across levels as single instances marked with DontDestroyOnLoad.

When a new level loads, the UIManager finds the canvas and attaches the corresponding UI elements to it. All hint UIs and audio clips are stored in a list.

A UIManager method turns off the current hint, shows the designated hint, plays its audio, and then calls a callback function once everything is done.
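The hint flow is sketched below with hypothetical classes. In Unity, the wait before the callback would typically be a coroutine step such as `yield return new WaitForSeconds(clip.length)`. Here, a fake audio player reports completion immediately so the order of steps is easy to follow.

```python
from typing import Callable, List, Optional


class FakeAudio:
    """Stand-in for an AudioSource: 'plays' a clip and reports completion immediately."""

    def __init__(self, log: List[str]) -> None:
        self.log = log

    def play(self, clip: str, on_finished: Callable[[], None]) -> None:
        self.log.append(f"play {clip}")
        on_finished()


class UIManager:
    def __init__(self, hints: List[str], clips: List[str], audio: FakeAudio, log: List[str]) -> None:
        self.hints, self.clips, self.audio, self.log = hints, clips, audio, log
        self.current: Optional[int] = None

    def show_hint(self, index: int, on_complete: Optional[Callable[[], None]] = None) -> None:
        """Hide the current hint, show hint `index`, play its clip, then run on_complete."""
        if self.current is not None:
            self.log.append(f"hide {self.hints[self.current]}")
        self.current = index
        self.log.append(f"show {self.hints[index]}")

        def finished() -> None:
            if on_complete is not None:
                on_complete()

        self.audio.play(self.clips[index], finished)
```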

AudioManager has methods for playing different kinds of audio. Each one works as a template for one type of audio, such as background music, narrative voice-overs, or sound effects.

These methods make it easy to trigger audio when a puzzle is solved or when the player interacts with something.
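One way to give each audio type its own rule is sketched below. The rules are assumptions: background music keeps playing if the same track is requested again, a new narration line cuts off the previous one, and sound effects may overlap. An event list replaces real playback. The `instance()` accessor mimics the single shared object that DontDestroyOnLoad provides in Unity.

```python
from typing import List, Optional, Tuple


class AudioManager:
    """One play method per audio type; each method encodes that type's rule."""

    _instance: Optional["AudioManager"] = None

    def __init__(self) -> None:
        self.bgm: Optional[str] = None     # looping background music, one track at a time
        self.voice: Optional[str] = None   # narration, a new line cuts off the previous one
        self.events: List[Tuple[str, str]] = []

    @classmethod
    def instance(cls) -> "AudioManager":
        # Created once and shared by every level, like a DontDestroyOnLoad singleton.
        if cls._instance is None:
            cls._instance = cls()
        return cls._instance

    def play_bgm(self, clip: str) -> None:
        if clip == self.bgm:               # keep the same track running across level loads
            return
        if self.bgm is not None:
            self.events.append(("stop_bgm", self.bgm))
        self.bgm = clip
        self.events.append(("start_bgm", clip))

    def play_voice(self, clip: str) -> None:
        if self.voice is not None:
            self.events.append(("stop_voice", self.voice))
        self.voice = clip
        self.events.append(("start_voice", clip))

    def play_sfx(self, clip: str) -> None:
        self.events.append(("sfx", clip))  # one-shots may overlap, nothing is stopped
```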

Additional Feature

Feature: Controller-driven Hand

Player feedback showed that seeing only controllers in the game weakens players' identification with their role and breaks the illusion of VR. However, using hand tracking for everything would make interactions unpredictable, because players' hand movements vary a lot while they explore the environment. So I added controller-driven hands: players still use the controllers as input, but the controllers appear as virtual hands whose animations match the player's input, such as a thumbs up, pointing, or a fist.

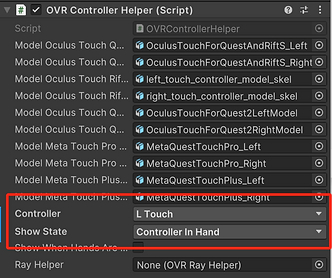
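The kind of input-to-pose mapping involved is illustrated below. This is a hypothetical sketch, not the Meta SDK's implementation; with controller-driven hands, the SDK drives the hand poses itself. The 0.5 threshold and the use of a thumb-rest touch sensor are assumptions.

```python
from enum import Enum


class HandPose(Enum):
    OPEN = "open"
    POINT = "point"
    THUMBS_UP = "thumbs_up"
    FIST = "fist"


def pose_from_input(trigger: float, grip: float, thumb_touching: bool,
                    threshold: float = 0.5) -> HandPose:
    """Pick a hand pose from analog trigger/grip values and whether the thumb rests on the controller."""
    index_curled = trigger >= threshold
    fingers_curled = grip >= threshold
    if fingers_curled and index_curled:
        return HandPose.FIST if thumb_touching else HandPose.THUMBS_UP
    if fingers_curled:
        return HandPose.POINT  # grip held, index finger left extended
    return HandPose.OPEN
```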

With the Meta SDK, the hand and controller prefabs are added under the two hand anchors. In the camera rig, the controller-driven hand poses option should be set to Natural to show the semi-transparent hand models. I kept controller-based teleportation, because controller-driven hands cannot "pinch".

The keyboard panel was switched from ray interaction to poke interaction, because poking keys with the index finger feels more natural. Placing letters on the panel above is implemented with the Interactable Event "OnSelect".